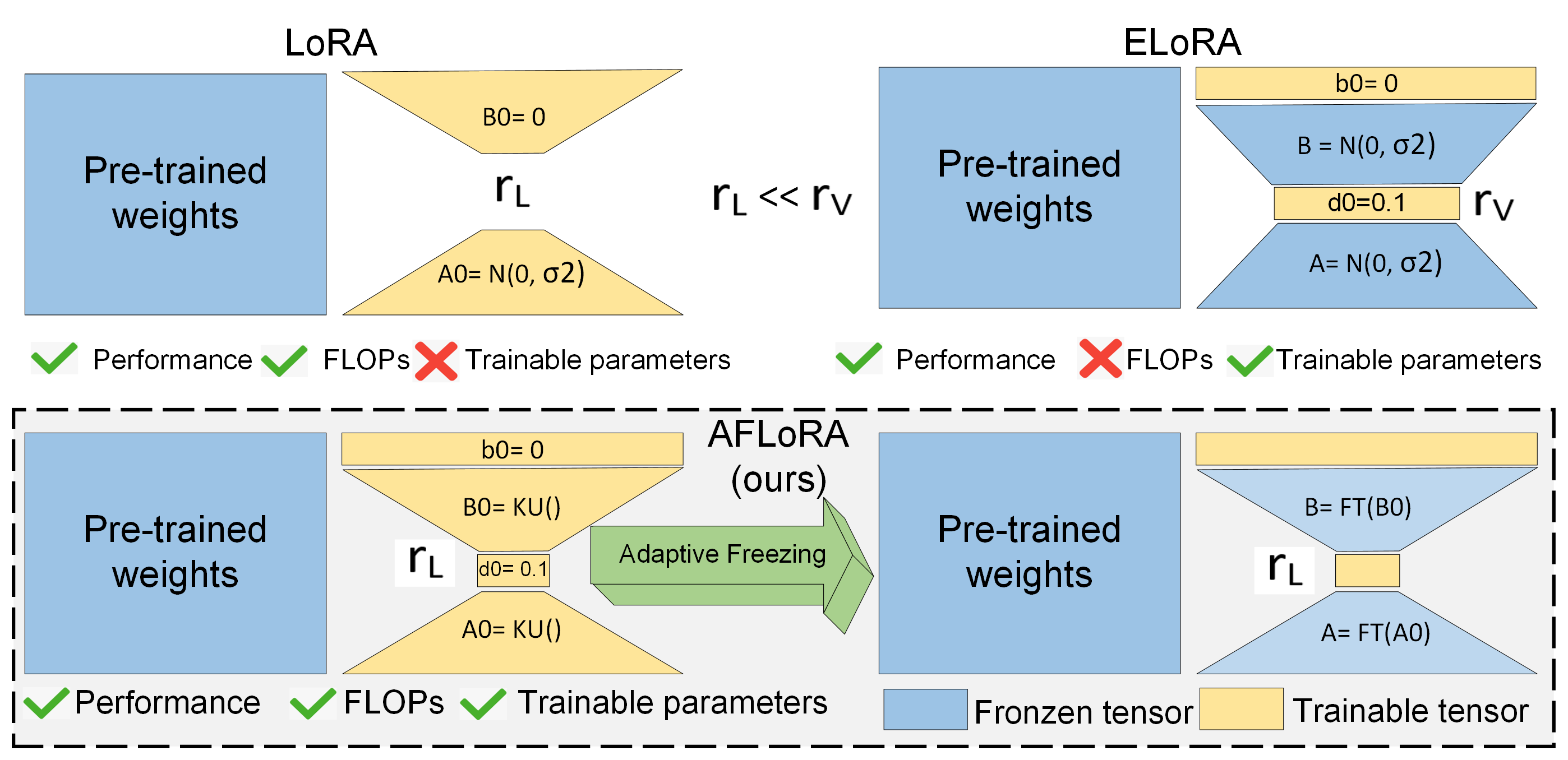

AFLoRA: Adaptive Freezing of Low Rank Adaptation in Parameter Efficient Fine-Tuning of Large Models

A novel Parameter-Efficient Fine-Tuning (PEFT) method that achieves SOTA performance while reducing average trainable parameters, runtime, and FLOPs.

ACL 2024, March 2024

03/2024

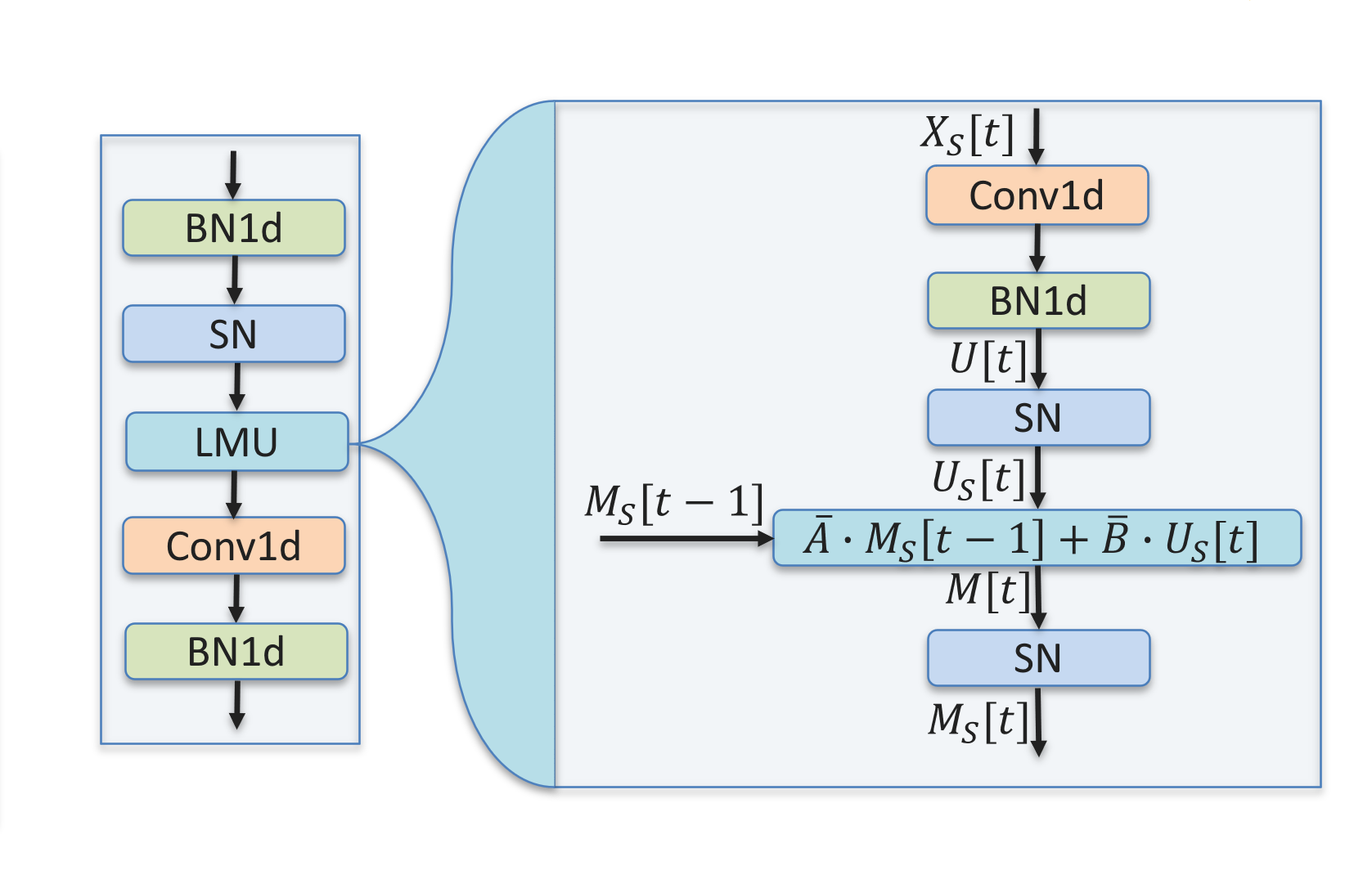

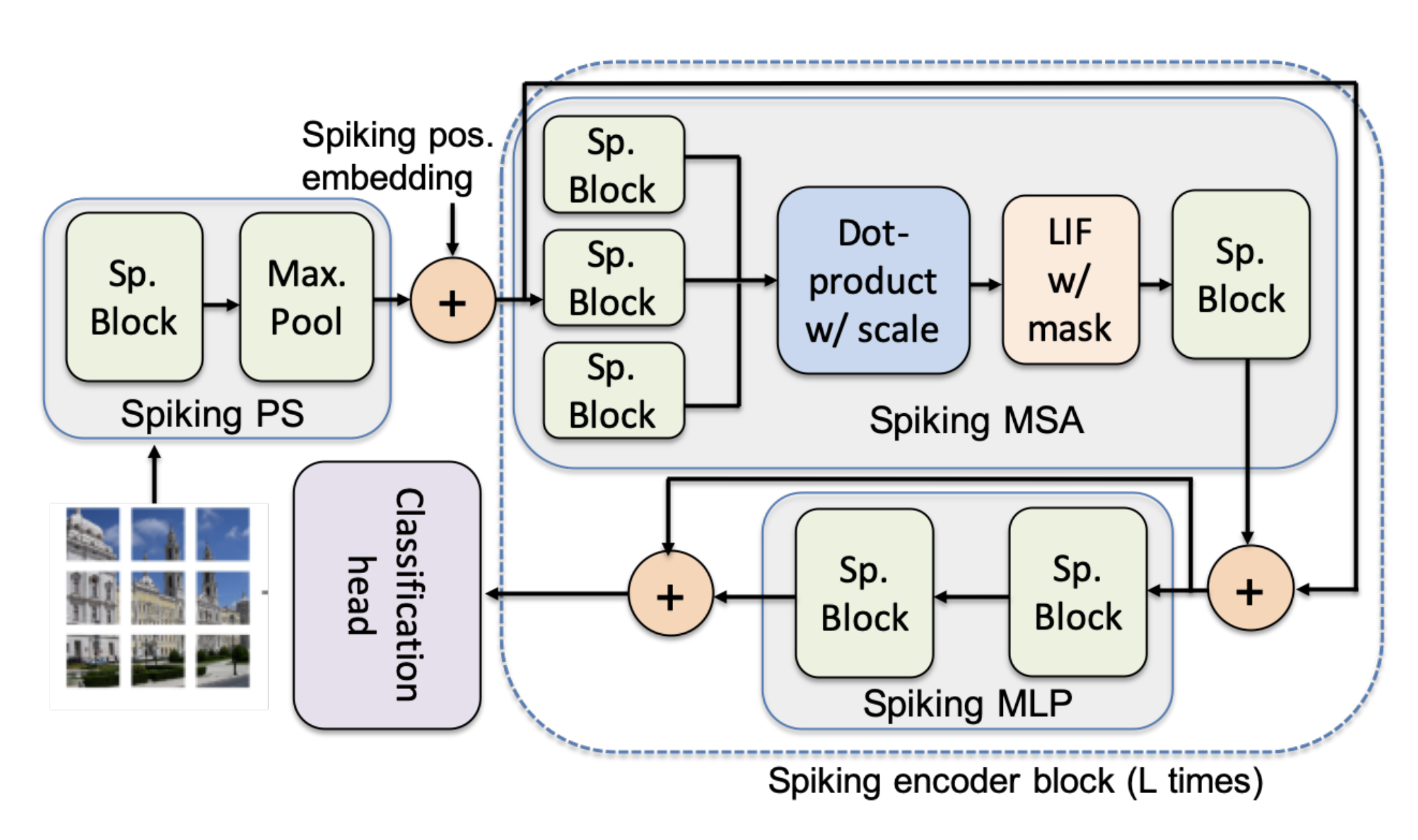

LMUFormer: Low Latency Yet Powerful Spiking Legendre Memory Units

A spiking model for long sequential data that performs comparably to Transformer and RNN models while requiring substantially fewer parameters and FLOPs.

ICLR 2024, January 2024

01/2024

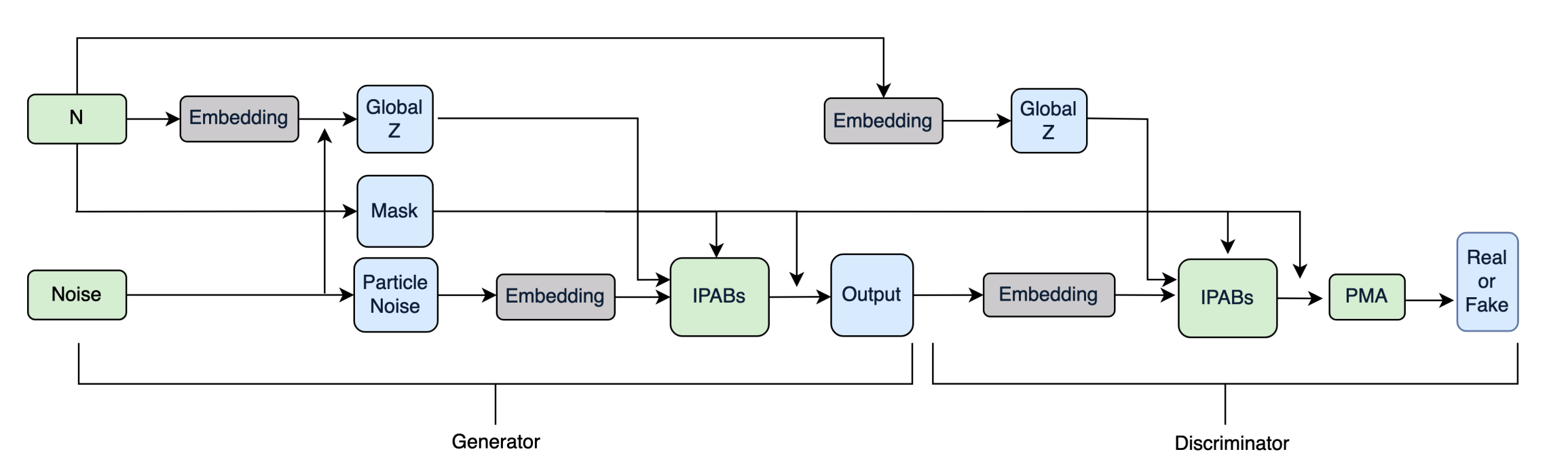

Induced Generative Adversarial Particle Transformers

A novel GAPT model that offers linear time complexity and captures intricate jet substructure, surpassing MPGAN on many metrics.

Workshop at the 37th Conference on Neural Information Processing Systems (NeurIPS), December 2023

12/2023

Spiking Neural Networks with Dynamic Time Steps for Vision Transformers

A novel training framework that dynamically allocates the number of time steps to each ViT module based on a trainable score assigned to each time step.

November 2023

11/2023

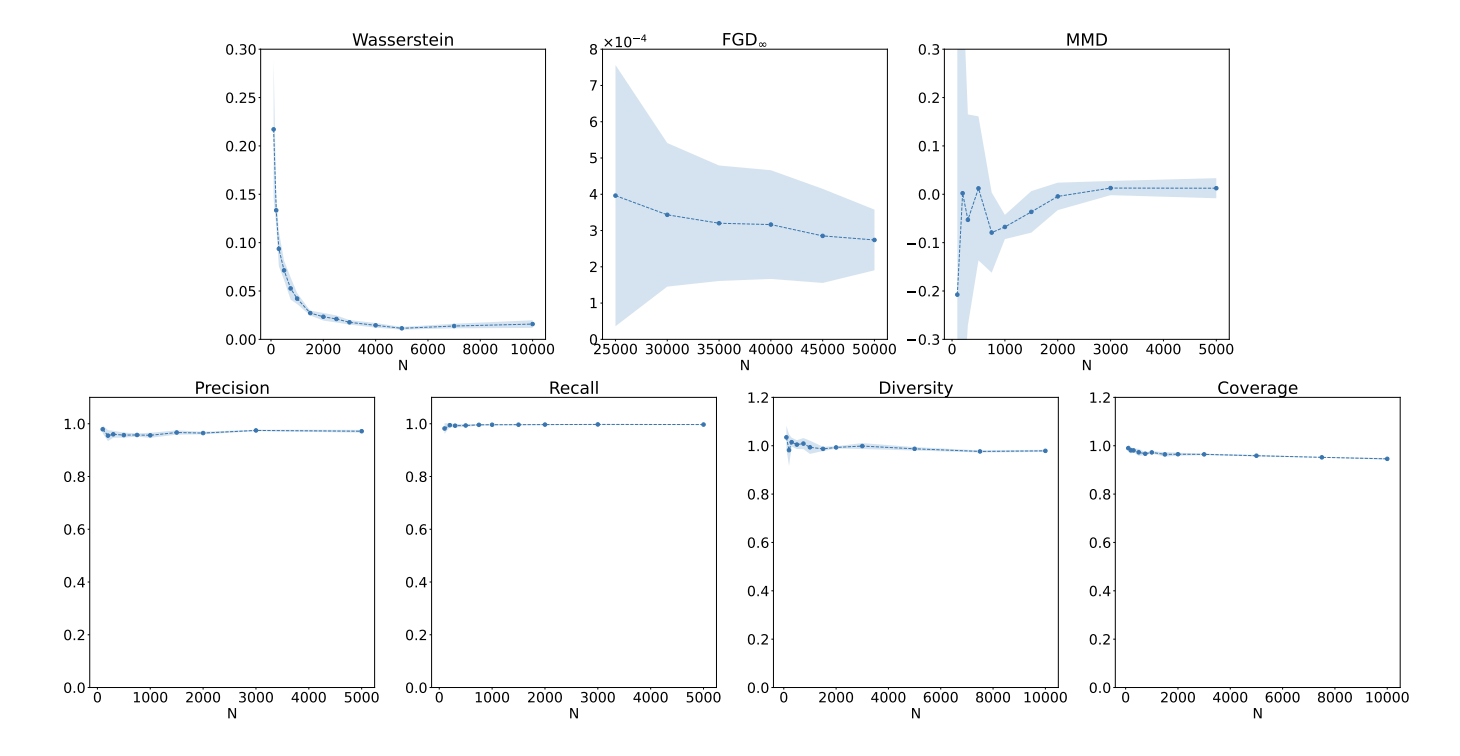

Evaluating Generative Models in High Energy Physics

The first systematic review and investigation into evaluation metrics and their sensitivity to failure modes of generative models, using the framework of two-sample goodness-of-fit testing, and their relevance and viability for HEP.

Physical Review D, November 2022

11/2022