Overview

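LMUFormer is a low-complexity sequence model, with a spiking variant, that augments Legendre Memory Units (LMUs) with transformer-like components: a convolutional patch embedding and a convolutional channel mixer. It keeps the sequential (streaming) processing ability of a recurrent model and reaches performance comparable to transformer-based models with far fewer parameters and FLOPs.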
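LMUFormer's spiking version relies on spiking neurons, which integrate input over time and emit binary spikes. For readers new to spiking networks, here is a minimal sketch of a leaky integrate-and-fire (LIF) neuron. LIF with a hard reset is assumed only as a common, illustrative neuron model: this overview does not state which neuron model, leak factor, threshold, or reset rule LMUFormer uses, and `lif_neuron`, `beta`, and `threshold` are hypothetical names.

```python
def lif_neuron(inputs, beta=0.9, threshold=1.0):
    """Leaky integrate-and-fire neuron with hard reset; returns a 0/1 spike train.

    Hypothetical illustration: `beta` is the leak factor applied to the membrane
    potential each step, `threshold` is the firing threshold.
    """
    v = 0.0  # membrane potential, carried across time steps (the neuron's state)
    spikes = []
    for x in inputs:
        v = beta * v + x  # leak the previous potential, then integrate the input
        if v >= threshold:
            spikes.append(1)
            v = 0.0  # hard reset after firing
        else:
            spikes.append(0)
    return spikes


print(lif_neuron([0.5, 0.5, 0.5, 0.0, 1.2]))  # [0, 0, 1, 0, 1]
```

Because the membrane potential `v` persists between time steps, every spiking neuron carries state of its own, and its output is binary rather than real-valued.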

Highlights

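- Introduced an architecture that augments the LMU with a convolutional patch embedding and a convolutional channel mixer

- Achieved a 53× reduction in parameters compared to state-of-the-art (SOTA) transformer-based models on the Speech Commands V2 (SCv2) dataset

- Achieved a 65× reduction in FLOPs in the same comparison while maintaining comparable performance

- Leveraged sequential (real-time) processing to achieve a 32.03% reduction in sequence length with only a negligible drop in performance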
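The highlights use two kinds of figures: an "N×" reduction is the ratio of the baseline's count to the model's count, while a percentage reduction in sequence length compares the number of input steps processed against the full input. The short sketch below makes that arithmetic concrete. The helper names and every number in it are hypothetical, chosen only to illustrate the calculation; none are results from the paper.

```python
def reduction_factor(baseline: float, model: float) -> float:
    """Return how many times smaller `model` is than `baseline` (53.0 means 53x)."""
    return baseline / model


def percent_reduction(full: float, reduced: float) -> float:
    """Return the relative reduction from `full` to `reduced`, in percent."""
    return 100.0 * (full - reduced) / full


# Hypothetical parameter counts, for illustration only (not taken from the paper).
baseline_params, model_params = 5_300_000, 100_000
print(f"{reduction_factor(baseline_params, model_params):.0f}x fewer parameters")  # prints: 53x fewer parameters

# A 32.03% shorter sequence means processing about 68% of the input steps;
# for a hypothetical 1000-step input that is roughly 680 steps.
full_steps = 1000
kept_steps = round(full_steps * (1 - 0.3203))
print(kept_steps, f"{percent_reduction(full_steps, kept_steps):.2f}%")
```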

Architecture

- Patch Embedding: Convolution-based embedding for efficient feature extraction

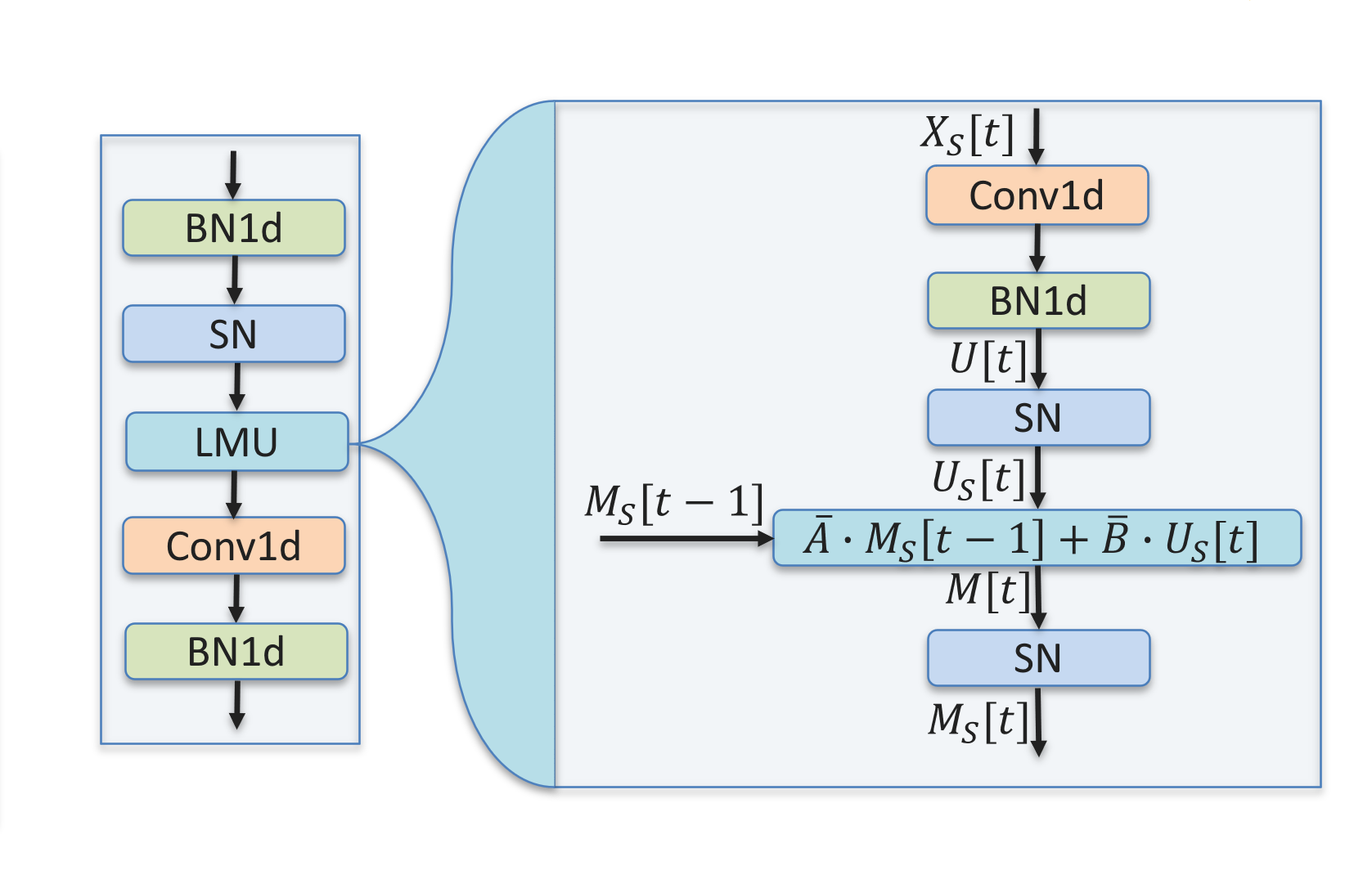

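- LMU: Modified Legendre Memory Unit that maintains a compact memory of past inputs, enabling sequential (streaming) processing

- Channel Mixer: Convolution-based mixing across channels for improved feature interaction

- Spiking Integration: In the spiking variant, spiking neurons are incorporated into the patch embedding and channel mixer, giving these modules internal states while reducing computational complexity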
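As background for the LMU component, here is a minimal sketch of the standard LMU memory update from Voelker et al. (2019), not LMUFormer's modified version. A d-dimensional state m follows θ·dm/dt = A·m + B·u, which compresses a sliding window of roughly the last θ time units of the scalar input u onto d Legendre polynomials. For brevity the sketch discretizes with forward Euler; more accurate schemes such as zero-order hold are commonly used instead. The function names `lmu_matrices` and `lmu_memory` and the defaults d = 4, θ = 50 are illustrative assumptions.

```python
def lmu_matrices(d: int):
    """Continuous-time state-space matrices (A, B) of the LMU memory (Voelker et al., 2019)."""
    # a_ij = (2i+1) * (-1 if i < j else (-1)^(i-j+1))
    A = [
        [(2 * i + 1) * (-1.0 if i < j else (-1.0) ** (i - j + 1)) for j in range(d)]
        for i in range(d)
    ]
    # b_i = (2i+1) * (-1)^i
    B = [(2 * i + 1) * (-1.0) ** i for i in range(d)]
    return A, B


def lmu_memory(inputs, d=4, theta=50.0, dt=1.0):
    """Run the LMU memory over a scalar input sequence; return the state after each step.

    Assumption: forward-Euler discretization of theta * dm/dt = A m + B u,
    chosen here for simplicity rather than accuracy.
    """
    A, B = lmu_matrices(d)
    m = [0.0] * d  # memory state, starts at zero
    states = []
    for u in inputs:
        # continuous-time derivative (times theta): A m + B u
        dm = [sum(A[i][j] * m[j] for j in range(d)) + B[i] * u for i in range(d)]
        # Euler step of size dt / theta; builds a new list, so stored states are not aliased
        m = [m[i] + (dt / theta) * dm[i] for i in range(d)]
        states.append(m)
    return states


states = lmu_memory([1.0, 0.0, 0.0, 0.5])
print(len(states), len(states[0]))  # 4 time steps, d = 4 memory dimensions
```

In a full LMU layer, learned projections map the layer input to u and read features out of the memory; this sketch keeps only the fixed memory dynamics.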