Overview

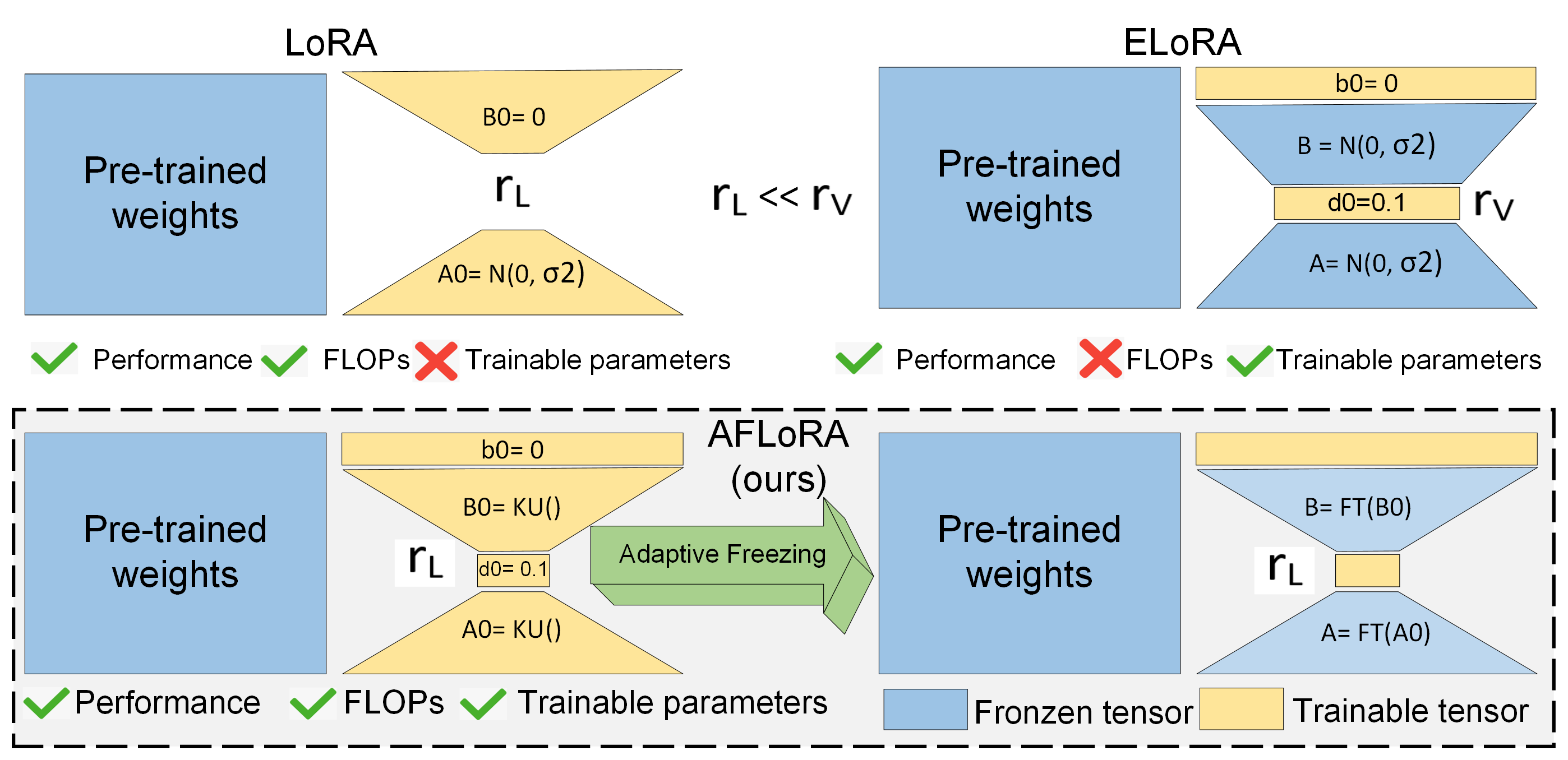

AFLoRA (Adaptive Freezing of Low-Rank Adaptation) adds an adaptive freezing mechanism to LoRA. It reports state-of-the-art results among parameter-efficient fine-tuning methods while training fewer parameters and running faster.

Highlights

- Developed a novel adaptive freezing strategy for LoRA paths

- Achieved up to 0.85% average improvement on the GLUE benchmark

- Reduced average trainable parameters by up to 9.5×

- Improved runtime by up to 1.86× over comparable parameter-efficient methods

Architecture

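- Parallel LoRA Paths: A trainable low-rank path, made of a down-projection and an up-projection matrix, added alongside each frozen pre-trained weight tensor

- Feature Transformation: A trainable vector that follows each projection matrix

- Freezing Score: Novel metric used to decide when a projection matrix can be frozen

- Adaptive Mechanism: Projection matrices are frozen incrementally during fine-tuning, which reduces computation and helps limit over-fitting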
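
The four components above can be sketched in a few lines of plain Python. This is a minimal illustration under stated assumptions, not the AFLoRA implementation: the class name `AdaptiveLowRankPath`, the initialization values, the score formula (a smoothed mean of |weight × gradient|), and the rule "freeze when the score falls below a threshold" are all hypothetical stand-ins chosen to show the mechanics. The output of `forward` would be added to the output of the frozen pre-trained layer.

```python
import random


def matvec(M, v):
    """Multiply a matrix (list of rows) by a vector."""
    return [sum(m * x for m, x in zip(row, v)) for row in M]


class AdaptiveLowRankPath:
    """Hypothetical parallel path: s_b * (B @ (s_d * (A @ x)))."""

    def __init__(self, d_in, d_out, rank, seed=0):
        rng = random.Random(seed)
        # Down-projection A (rank x d_in) and up-projection B (d_out x rank).
        self.A = [[rng.gauss(0.0, 0.1) for _ in range(d_in)] for _ in range(rank)]
        self.B = [[rng.gauss(0.0, 0.1) for _ in range(rank)] for _ in range(d_out)]
        # Feature transformation vectors, one after each projection.
        self.s_d = [1.0] * rank
        # Assumption: zero init so the path contributes nothing at the start.
        self.s_b = [0.0] * d_out
        self.trainable = {"A": True, "B": True, "s_d": True, "s_b": True}
        self.score = {"A": None, "B": None}

    def forward(self, x):
        h = [s * a for s, a in zip(self.s_d, matvec(self.A, x))]
        return [s * b for s, b in zip(self.s_b, matvec(self.B, h))]

    def update_score(self, name, grad, beta=0.9):
        """Illustrative score: moving average of mean |weight * grad|."""
        W = getattr(self, name)
        vals = [abs(w * g) for w_row, g_row in zip(W, grad)
                for w, g in zip(w_row, g_row)]
        current = sum(vals) / len(vals)
        prev = self.score[name]
        self.score[name] = current if prev is None else beta * prev + (1 - beta) * current
        return self.score[name]

    def freeze_below(self, threshold):
        """Assumed rule: freeze a projection once its score drops below threshold."""
        for name in ("A", "B"):
            s = self.score[name]
            if self.trainable[name] and s is not None and s < threshold:
                self.trainable[name] = False

    def num_trainable(self):
        sizes = {
            "A": len(self.A) * len(self.A[0]),
            "B": len(self.B) * len(self.B[0]),
            "s_d": len(self.s_d),
            "s_b": len(self.s_b),
        }
        return sum(n for k, n in sizes.items() if self.trainable[k])


path = AdaptiveLowRankPath(d_in=8, d_out=8, rank=2)
print(path.num_trainable())  # 16 + 16 + 2 + 8 = 42
```

Once both projection matrices are frozen, only the two vectors remain trainable (10 values in this toy configuration), which is the source of the parameter savings. In a real training loop the frozen matrices would also stop receiving gradient updates.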